TORONTO — Ask Meta Platforms Inc.'s head of artificial intelligence research how the technology could be made safer and she takes inspiration from an unlikely place: the grocery store.

Supermarkets are filled with products that offer key information at a glance, Joelle Pineau says.

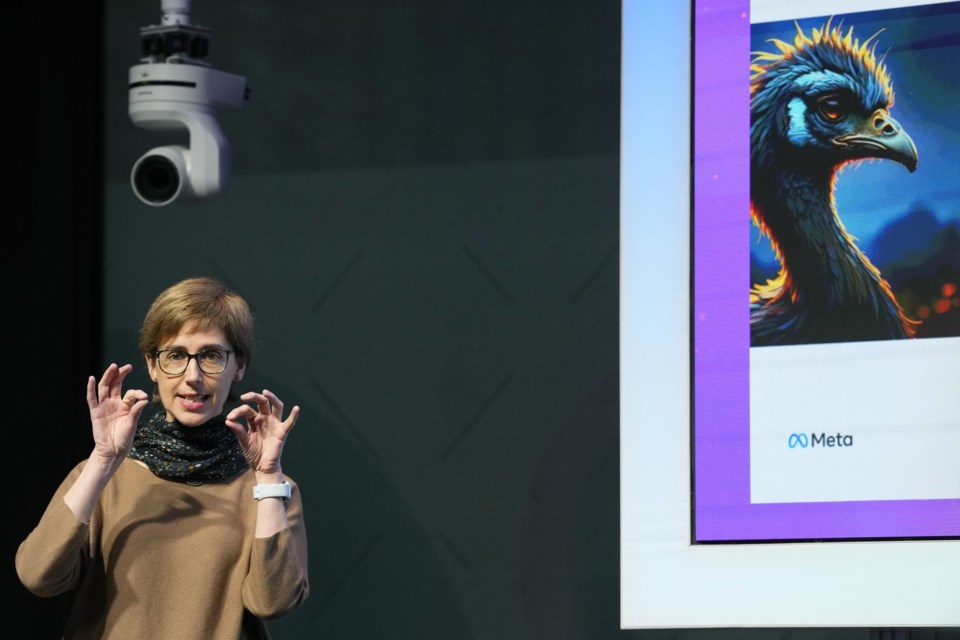

"That list of ingredients allows people to make informed choices about whether they want to eat that food or not," explains Pineau, who is due to speak at the Elevate tech conference in Toronto this week.

"But right now, in AI, we seem to have a rather paternalistic approach to (transparency), which is let us decide what is the regulation or what everyone should or shouldn't do, rather than have something that empowers people to make choices."

Pineau's reflections on the state of AI come as the globe is awash in chatter about the future of the technology and whether it will cause unemployment, bias and discrimination, or even pose existential risks to humanity.

Governments are working to assess many of these problems as they edge toward AI-specific legislation, which in Canada won't come into effect until at least next year.

Tech companies are keen to be involved in shaping AI guardrails, arguing that regulation could help protect their users and keep competitors on an even playing field. Still, they are wary that regulation could limit the pace, progress and plans they've made with AI.

Whatever form AI guardrails take, Pineau wants transparency to be a priority, and she already has an idea about how to make that happen.

She says legislation could require creators to document what information they used to build and develop AI models, their capabilities and, perhaps, some of the results from their risk assessments.

"I don't yet have a very prescriptive point of view of what should or shouldn't be documented, but I do think that is kind of the first step," she says.

Many companies in the AI space are doing this work already but "they're not being transparent about it," she adds.

Research suggests there is plenty of room for improvement.

In May, Stanford University's Institute for Human-Centred AI analyzed how transparent prominent AI models were, using 100 indicators, including whether companies made use of personal information, disclosed the licenses they have for data and took steps to omit copyrighted materials.

The researchers found many models were far from acing the test. Meta's Llama 2 landed a 60 per cent score, Anthropic's Claude 3 got 51 per cent, GPT-4 from OpenAI sat at 49 per cent and Google's Gemini 1.0 Ultra reached 47 per cent.

Pineau, who doubles as a computer science professor at McGill University in Montreal, has similarly found "the culture of transparency is a very different one from one company to the next."

At Meta, which owns Facebook, Instagram and WhatsApp, there has been a commitment to open-source AI models, which typically allow anyone to access, use, modify, and distribute them.

Meta, however, has also rolled out an AI search and assistant tool on Facebook and Instagram that users cannot opt out of.

By contrast, some companies let users opt out of such products or have adopted additional transparency features, but plenty of others have taken a more lax approach or rebuffed attempts to encourage them to make their models open-source.

A more standardized and transparent approach used by all companies would have two key benefits, Pineau said.

It would build trust and force companies "to do the right work" because they know their actions are going to be scrutinized.

"It's very clear this work is going out there and it's got to be good, so there's a strong incentive to do high-quality work," she said.

"The other thing is if we are that transparent and we get something wrong — and it happens — we're going to learn very quickly and often ... before it gets into (a) product, so it's also a much faster cycle in terms of discovering where we need to do better."

While the average person might not be excited by the kinds of information she envisions organizations disclosing, Pineau said it would come in handy for governments, companies and startups trying to use AI.

"Those people are going to have a responsibility for how they use AI and they should have that transparency as they bring it into their own workforce," she said.

This report by The Canadian Press was first published Sept. 30, 2024.

Tara Deschamps, The Canadian Press